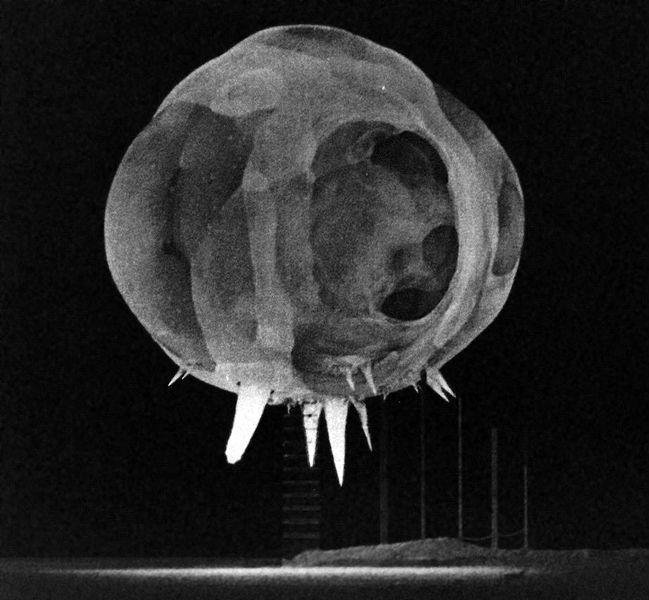

Figure 1: Nuclear explosion photographed by a rapatronic camera less than 1 millisecond after detonation. The fireball is about 20 meters in diameter. The spikes at the bottom of the fireball are due to what is known as the rope trick effect. From Wikimedia Commons; in the public domain because the image was taken by the government of the United States.

In my blog post “The eternal photograph” I spoke about how photography transcends time by isolating an image in time. This isolation creates a kind of time warp. A comment by a physicist friend got me thinking about what that isolating really means, and that is what I would like to talk about today.

To a physicist, a photograph is two things: a measurement, and an interaction between the observer (aka the photographer or camera) and the observed (aka the subject). Indeed, if you think about it, the photographer uses a camera to make a measurement of the subject.

I know that most people don’t think of a photograph as a measurement, unless the purpose of taking the picture is explicitly to make one. However, let’s think about the process of taking a picture for a moment. When I take a photograph, one of the first things I do is check how sharp it is, that is, how well it has resolved the spatial elements of the picture. I am a real stickler for this, and I often reject a picture in the camera before I even take it home. Why isn’t a picture sharp? Well, first there is the resolution (sharpness) of the lens, and then there is the pixel spacing of the detector (or the grain of the film). These set an absolute baseline sharpness. That is to say, there is a fundamental limit to how well we can place a given object in space (how well we know where it is). But then we have to worry about how much the photographer’s hand is moving (or the tripod is moving in the wind) and, for that matter, whether the subject is perfectly still. So, to the degree that the photographer is trying to obtain a sharp image, (s)he is trying to say precisely where the elements of the picture are. That is essentially what we mean by measuring position.

You can already see how motion reduces the accuracy with which we measure position. Let’s explore this a bit further. Suppose that we take a picture of a runner moving past us. The picture tells us, to some precision, where the subject was during the exposure. The image is blurred by the motion. Indeed, if you measure the length of the blur (as a distance at the subject, not on the sensor) and divide by the exposure time, you get the velocity of the runner. Cool!
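For readers who like to see the arithmetic, here is a minimal Python sketch of the blur-to-velocity calculation. The function names, the `magnification` parameter, and the numbers are illustrative assumptions, not measurements: it assumes the runner moves parallel to the sensor at a constant speed, and that the blur measured on the sensor can be scaled up to the subject by a known magnification.

```python
def velocity_from_blur(blur_length_m: float, exposure_s: float) -> float:
    """Estimate subject speed (m/s) from the length of its motion blur.

    blur_length_m: distance the subject moved during the exposure,
    measured at the subject (not on the sensor).
    exposure_s: shutter time in seconds.
    Assumes constant speed, parallel to the sensor (an assumption).
    """
    if exposure_s <= 0:
        raise ValueError("exposure time must be positive")
    return blur_length_m / exposure_s


def blur_at_subject(blur_on_sensor_m: float, magnification: float) -> float:
    """Convert a blur length measured on the sensor to a distance at the subject.

    magnification (hypothetical parameter) is image size / object size;
    for a distant subject it is roughly focal_length / subject_distance.
    """
    return blur_on_sensor_m / magnification


# Hypothetical example: a 2 mm streak on the sensor, magnification 0.02
# (about a 100 mm lens at 5 m), shot at 1/60 s.
blur = blur_at_subject(0.002, 0.02)    # about 0.1 m at the runner
speed = velocity_from_blur(blur, 1 / 60)
print(f"{speed:.1f} m/s")              # prints 6.0 m/s
```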

Before I go any further, you need to realize that the precision of our “measurement” of velocity depends on how precisely we can define the length of the blur (sounds like sharpness to me) and how well we know the shutter speed. The longer the exposure, the longer the blur, and the less its measured length is limited by spatial resolution, or sharpness. So to measure velocity precisely you want to increase the exposure time.

But increasing the exposure time blurs the image and makes it harder to figure out where the runner is. To increase your positional precision you want to decrease the exposure time. So the two precisions are inversely related: sharpness, or position, requires a short exposure, while precise determination of velocity, or speed, requires a long one. This kind of inverse relationship between two variables is common in physics, and physicists refer to such variables as “being canonically conjugate in the Hamiltonian sense.*” I always loved that phrase. It was fun to drop it into conversation at parties and see what happened. It is a great “pick-up line.”
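Here is a toy model of that trade-off, again a sketch with made-up numbers rather than a real camera calibration. It lumps lens, pixels, and shake into one hypothetical sharpness limit, combines that limit with the blur length in quadrature to get the position spread (the quadrature rule is an assumption of this sketch), and assumes the shutter time is known exactly, so the only error in the velocity comes from measuring the blur length to within the sharpness limit.

```python
import math


def position_spread(speed: float, exposure: float, sharpness: float) -> float:
    """How poorly one photo pins down position (metres).

    The subject could be anywhere along a blur of length speed * exposure;
    this is combined in quadrature with the fixed sharpness limit
    (the quadrature combination is an assumption of this toy model).
    """
    return math.hypot(speed * exposure, sharpness)


def speed_spread(exposure: float, sharpness: float) -> float:
    """How poorly one photo pins down speed (m/s).

    The blur length is known only to about +/- sharpness, and that error is
    divided by the exposure time. Assumes the shutter time is known exactly.
    """
    return sharpness / exposure


speed = 6.0        # m/s, hypothetical runner
sharpness = 0.005  # m, hypothetical combined lens/pixel/shake limit at the subject

for exposure in (1 / 1000, 1 / 250, 1 / 60, 1 / 15):
    dx = position_spread(speed, exposure, sharpness)
    dv = speed_spread(exposure, sharpness)
    print(f"1/{round(1 / exposure):>4} s: position ±{dx * 100:5.1f} cm, "
          f"speed ±{dv:6.2f} m/s")
```

As the exposure lengthens, the position spread grows and the speed spread shrinks: the inverse relationship above, in numerical form.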

This is related to, but is not really an example of, the Heisenberg uncertainty principle. The uncertainty principle is the recognition that there is an absolute limit to the precision (actually, to the product of the two precisions) that can be obtained. It applies to quantum systems, and it is closely tied to the fact that you cannot measure something without perturbing it. For instance, in our discussion of positional precision I ignored the question of whether there was enough light. As you make the exposure shorter and shorter, you will eventually lose resolution and precision because you become light limited. Of course, you can crank up the light, but eventually it will perturb the subject, either blinding it, if it is a person, or burning it to a crisp, animate or not. On a small scale, things are worse: light pushes on the subject, causing it to move. The more light you use, the more likely you are to move it.
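For reference, here is the textbook statement of the principle, where $\Delta x$ and $\Delta p$ are the standard deviations of position and momentum and $\hbar$ is the reduced Planck constant. Writing momentum as mass times velocity ($p = mv$, fine at everyday speeds) turns it into a bound on velocity, which shows why the limit only matters for very light objects:

$$
\Delta x\,\Delta p \ge \frac{\hbar}{2}
\quad\Longrightarrow\quad
\Delta v \ge \frac{\hbar}{2\,m\,\Delta x}
$$

For a hypothetical 70 kg runner located to within a millimetre, the right-hand side is about $7.5 \times 10^{-34}$ m/s, utterly negligible next to any motion blur.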

So all of this serves to illustrate the simple fact that there are limits to how precisely a photograph can locate, or fix, a subject in space. Similarly, because you have to use a finite shutter speed to take a picture, there are limits to how precisely a photograph fixes the subject in time. The 15-year-old Mark Twain becomes a little less defined.

* For you physics aficionados, I want to point out that position and velocity are not the true Hamiltonian conjugate variables; rather, it is position and momentum, where momentum is the product of mass and velocity. This sounds like a semantic issue, but it actually has profound physical consequences. Only geometric points or massless particles like the photons of light can travel at the speed of light.
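To make the footnote’s last sentence concrete, these are the standard special-relativity relations for momentum. For a particle with rest mass $m > 0$, the Lorentz factor $\gamma$ grows without bound as $v$ approaches $c$, so reaching the speed of light would take infinite momentum (and energy); at everyday speeds $\gamma \approx 1$ and we recover $p = mv$. A massless photon instead carries momentum $p = E/c$ and always travels at $c$:

$$
p = \gamma m v, \qquad \gamma = \frac{1}{\sqrt{1 - v^2/c^2}}, \qquad p_{\text{photon}} = \frac{E}{c}
$$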